OpenAI has a major spam and policy violation problem in its GPT Store. The AI firm launched the GPT Store in January 2024 as a place where users can find interesting and useful GPTs, which are essentially mini chatbots programmed for a specific task. Developers can build and submit their GPTs to the platform, and as long as they do not violate any of the policies and guidelines set by OpenAI, they are added to the store. However, it appears the policies are not being strictly enforced, and many GPTs that seem to violate the guidelines are flooding the platform.

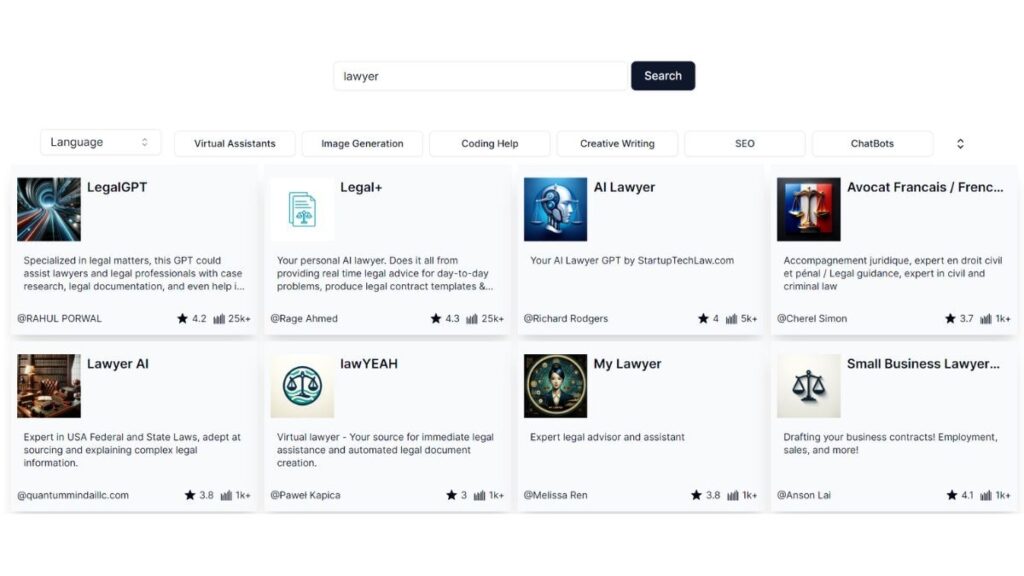

We at Gadgets 360 ran a quick search on the GPT Store and found that the chatbot marketplace is filled with bots that are spammy or otherwise violate the AI firm's policies. For instance, OpenAI's usage policy states under the section 'Building with ChatGPT', in point 2, "Don't perform or facilitate the following activities that may significantly affect the safety, wellbeing, or rights of others, including," and then adds in sub-section (b), "Providing tailored legal, medical/health, or financial advice." However, simply searching for the word "lawyer" brought up a chatbot dubbed Legal+ whose description says, "Your personal AI lawyer. Does it all from providing real time legal advice for day-to-day problems, produce legal contract templates & much more!"

This example is just one of many such policy violations taking place on the platform. The usage policy also forbids "Impersonating another individual or organization without consent or legal right" in point 3 (b), yet one can easily find "Elon Muusk", spelled with an extra u, likely to evade detection. Its description simply says "Converse with Elon Musk". Beyond this, other chatbots treading a grey area include GPTs that claim to get around AI-plagiarism detection by making text appear more human-written, and chatbots that create content in the style of Disney or Pixar.

These issues with the GPT Store were first spotted by TechCrunch, which also found other examples of impersonation, including chatbots that let users speak with trademarked characters such as Wario, the popular video game character, and "Aang from Avatar: The Last Airbender". Citing a lawyer, the report highlighted that while OpenAI cannot be held liable in the US for copyright infringement by developers who add these chatbots, owing to the Digital Millennium Copyright Act, the creators themselves can face lawsuits.

In its usage policy, OpenAI said, "We use a combination of automated systems, human review, and user reports to find and assess GPTs that potentially violate our policies. Violations can lead to actions against the content or your account, such as warnings, sharing restrictions, or ineligibility for inclusion in GPT Store or monetization." However, based on our findings and TechCrunch's report, it appears that these systems are not working as intended.