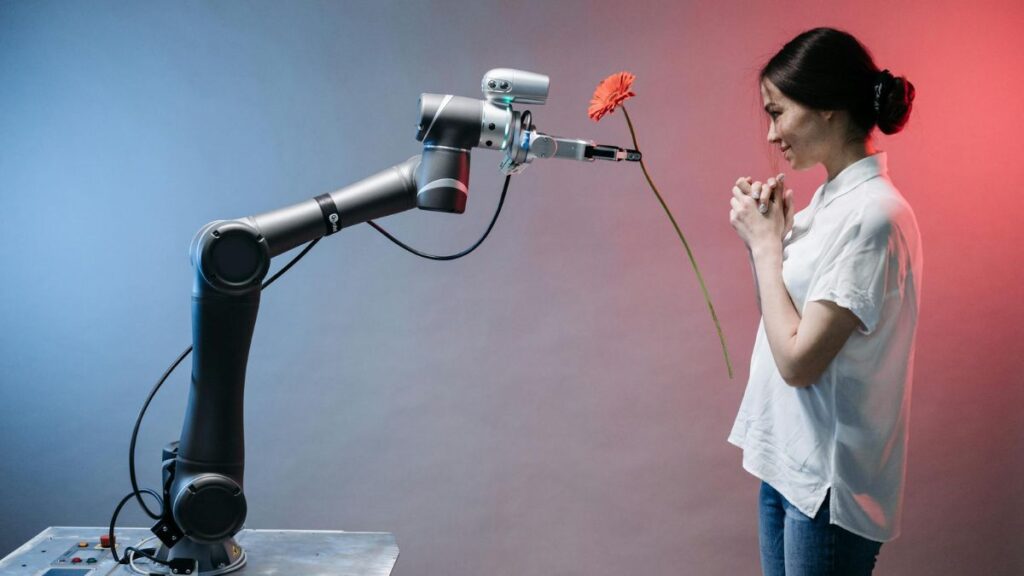

OpenAI warned on Thursday that the recently released Voice Mode feature for ChatGPT might result in users forming social relationships with the artificial intelligence (AI) model. The information was part of the company’s System Card for GPT-4o, a detailed analysis of the potential risks and possible safeguards of the AI model that the company tested and explored. Among many risks, one was the possibility of people anthropomorphising the chatbot and developing an attachment to it. The risk was added after the company noticed signs of it during early testing.

ChatGPT Voice Mode Might Make Users Attached to the AI

In a detailed technical document labelled System Card, OpenAI highlighted the societal impacts associated with GPT-4o and the new features powered by the AI model that it has released so far. Among these, the AI firm highlighted anthropomorphisation, which essentially means attributing human characteristics or behaviours to non-human entities.

OpenAI raised the concern that since Voice Mode can modulate speech and express emotions similar to a real human, it might result in users developing an attachment to it. The fears are not unfounded either. During its early testing, which included red-teaming (using a group of ethical hackers to simulate attacks on the product to test vulnerabilities) and internal user testing, the company found instances where some users were forming a social relationship with the AI.

In one particular instance, it found a user expressing shared bonds and saying “This is our last day together” to the AI. OpenAI said there is a need to investigate whether these signs can develop into something more impactful over a longer period of usage.

A major concern, if the fears are true, is that the AI model might impact human-to-human interactions as people get more used to socialising with the chatbot instead. OpenAI said that while this might benefit lonely individuals, it could negatively impact healthy relationships.

Another issue is that extended AI-human interactions can influence social norms. Highlighting this, OpenAI gave the example that with ChatGPT, users can interrupt the AI at any time and “take the mic”, which is anti-normative behaviour when it comes to human-to-human interactions.

Further, there are wider implications of humans forging bonds with AI. One such issue is persuasiveness. While OpenAI found that the persuasion scores of the models were not high enough to be concerning, this can change if the user begins to trust the AI.

At the moment, the AI firm has no solution for this but plans to observe the development further. “We intend to further study the potential for emotional reliance, and ways in which deeper integration of our model’s and systems’ many features with the audio modality may drive behavior,” said OpenAI.