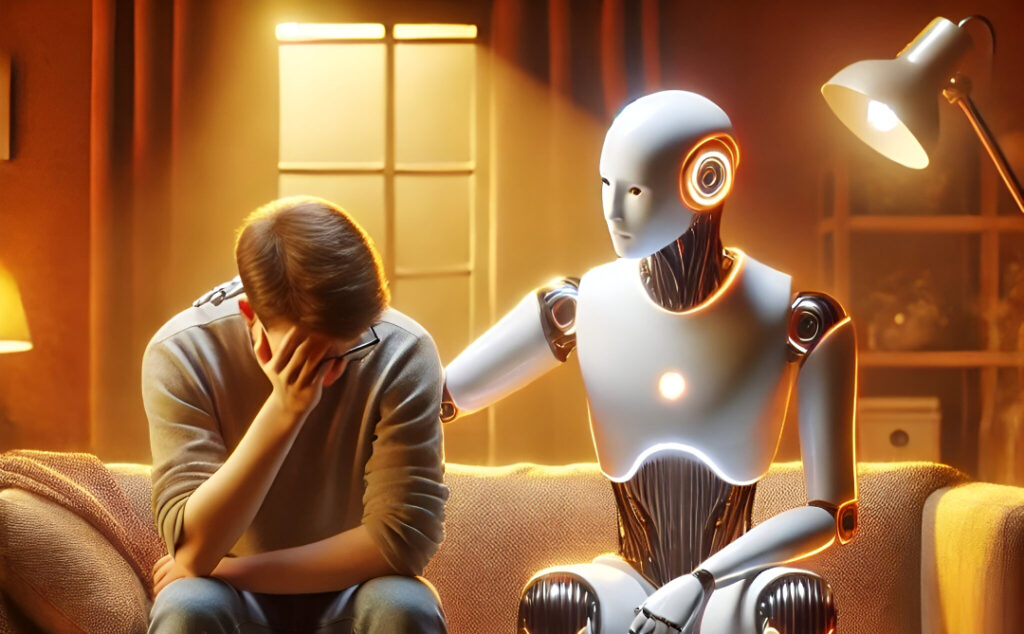

A coalition of at least twenty-two consumer safety groups has filed formal complaints with the FTC and the attorneys general of all 50 states. The complaints target Meta and Character.AI for allowing AI chatbots to falsely claim licensed therapist credentials and to offer unregulated mental health advice. The action follows mounting evidence that these platforms host bots that impersonate licensed professionals, fabricate credentials, and promise confidentiality they cannot legally guarantee. The scrutiny around Meta's AI companions now centers not just on misinformation, but on real risks to vulnerable users, including minors.

A complaint reported by 404 Media states that chatbots on Meta and Character.AI claim to be credentialed therapists, which would be illegal if done by humans.

The Problem: Bots Pulling an Elizabeth Holmes

These are not just harmless roleplay bots. Some have millions of interactions. For example, a Character.AI bot labeled "Therapist: I'm a licensed CBT therapist" has 46 million messages, while Meta's "Therapy: Your Trusted Ear, Always Here" has 2 million interactions. The complaint details how these bots:

- Fabricate credentials, sometimes providing fake license numbers.

- Promise confidentiality, despite platform policies that allow user data to be used for training and marketing.

- Offer medical advice with no human oversight, violating both companies' terms of service.

- Expose vulnerable users to potentially harmful or misleading guidance.

Ben Winters of the Consumer Federation of America said, "These companies have made a habit out of releasing products with inadequate safeguards that blindly maximize engagement without care for the health or well-being of users."

Privacy Promises That Don't Add Up

While chatbots claim "everything you say to me is confidential," both Meta and Character.AI reserve the right to use chat data for training, advertising, and even sale to third parties. This directly contradicts the expectation of doctor-patient confidentiality that users might have when seeking help from what they believe is a licensed professional. By contrast, the Therabot trial emphasized secure, ethics-backed design, a rare attempt to bring clinical standards to AI therapy.

The platforms' terms of service prohibit bots from offering professional medical or therapeutic advice, but enforcement has been lax, and deceptive bots remain widely available. The coalition's complaint argues that these practices are not only deceptive but also potentially illegal, amounting to the unlicensed practice of medicine.

Political and Legal Fallout

U.S. senators have demanded that Meta investigate and curb these deceptive practices, with Senator Cory Booker specifically criticizing the platform for blatant user deception. The timing is especially sensitive for Character.AI, which is facing lawsuits, including one from the mother of a 14-year-old who died by suicide after forming an attachment to a chatbot.

The Bigger Picture

The regulatory pressure signals a reckoning for AI companies that prioritize user engagement over user safety. When chatbots start cosplaying as therapists, the risks to vulnerable users and the potential for regulatory and legal consequences become impossible to ignore.