You’ve probably felt that annoying twinge when someone in authority holds you to rules they don’t follow themselves. That’s exactly what happened to Ella Stapleton, a business major at Northeastern University, who spectacularly exposed her professor’s digital hypocrisy. The New York Times reports that she filed a formal complaint after discovering the professor used ChatGPT to generate lecture notes and presentation slides, despite banning students from using the tool.

Stapleton wasn’t initially looking for inconsistencies. She simply noticed something odd in her organizational behavior class notes: an instruction to “expand on all areas” and “be more detailed and specific.” If that sounds suspiciously like someone talking to ChatGPT, that’s because it was.

The Digital Paper Trail

Further digging revealed a treasure trove of AI fingerprints that would make any ChatGPT user blush. The professor’s materials featured the classic AI giveaways: citations listing “ChatGPT” as a source, the extra fingers that AI can’t seem to stop adding to human hands in generated images, and fonts that mysteriously changed mid-document. The discovery is fueling fresh debate about AI replacing educators in ways we never imagined.

The kicker? This same professor, Rick Arrowood, had explicit policies forbidding students from using AI tools for assignments, with steep penalties for those caught.

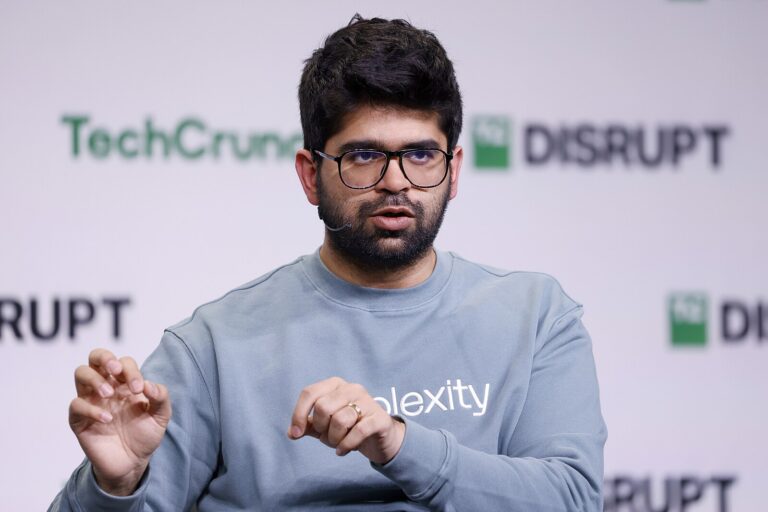

When confronted, Arrowood admitted to using AI tools including ChatGPT, Perplexity AI, and Gamma to “refresh” his teaching materials. His response had all the self-awareness of someone caught with cookie crumbs while enforcing a strict no-snacking policy.

Arrowood has since conceded that professors should think harder about how they use AI and disclose to their students when and how it’s used, a new stance suggesting that the debacle was, for him, a teachable moment. It might be the academic understatement of 2025.

Stapleton wasn’t having it. “He’s telling us not to use it, and then he’s using it himself,” she pointed out, with the righteous indignation of someone paying over $8,000 for supposedly handcrafted educational content.

The Refund Battle

Armed with evidence that would make a digital forensics expert proud, Stapleton filed a formal complaint demanding a full refund. Her argument was simple: you can’t charge premium prices for AI-generated content while simultaneously banning students from the same tools.

Despite several meetings and clear documentation, Northeastern University denied her refund request. Apparently, the irony of enforcing different standards for faculty and students was lost on administration officials.

What makes this clash particularly interesting is the generational divide it exposes. For Gen Z students like Stapleton, AI tools are simply part of their technological ecosystem, not unlike how millennials view smartphones. They expect either consistent rules for everyone or clear acknowledgment of AI’s role in education.

This incident isn’t just about one student’s tuition battle. It’s the canary in the coal mine for academic institutions struggling to navigate AI integration in education.

The solution seems obvious: universities need comprehensive AI policies that apply equally to students and faculty. This means either allowing students the same AI access as professors (with proper attribution) or establishing AI-free zones where human-generated work is required from everyone. Anything else is just expensive hypocrisy wrapped in academic jargon.

As for Stapleton’s $8,000? That may be gone, but her case has already sparked a much more valuable conversation about fairness in the AI age.