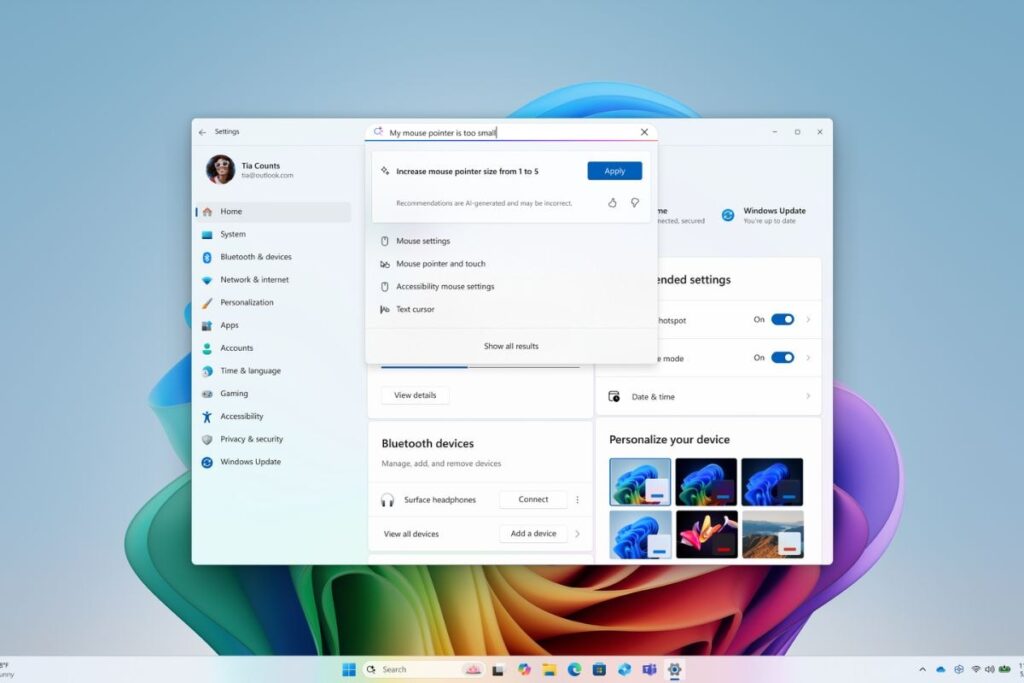

Microsoft has released Mu, a new artificial intelligence (AI) model that can run locally on a device. Last week, the Redmond-based tech giant released new Windows 11 features in beta, among them a new AI agents feature in Settings. The feature lets users describe what they want to do in the Settings menu, and uses AI agents to either navigate to the relevant option or perform the action autonomously. The company has now confirmed that the feature is powered by the Mu small language model (SLM).

Microsoft’s Mu AI Model Powers Agents in Windows Settings

In a blog post, the tech giant detailed its new AI model. It is currently deployed entirely on-device on compatible Copilot+ PCs, where it runs on the machine’s neural processing unit (NPU). Microsoft has worked on the model’s optimisation and latency, and claims that it responds at more than 100 tokens per second to meet the “demanding UX requirements of the agent in Settings scenario.”
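To put the throughput figure in perspective, 100 tokens per second works out to roughly 10 milliseconds per generated token. The short Python sketch below does that arithmetic; the reply lengths are hypothetical, and real end-to-end latency also includes processing the input and other overhead, which this sketch ignores.

```python
import math


def decode_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to generate num_tokens at a steady decode rate.

    Ignores input processing and any other overhead.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


def tokens_within_budget(budget_seconds: float, tokens_per_second: float) -> int:
    """Largest whole number of tokens that can be generated inside a latency budget."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    # Small epsilon guards against float error such as 0.29 * 100 = 28.999...
    return math.floor(budget_seconds * tokens_per_second + 1e-9)


if __name__ == "__main__":
    rate = 100.0  # Microsoft's stated figure is "more than 100 tokens per second"
    print(f"per-token latency: {1000 / rate:.0f} ms")
    print(f"tokens in a 0.5 s budget: {tokens_within_budget(0.5, rate)}")
    for n in (10, 25, 40):  # hypothetical reply lengths
        print(f"{n} tokens -> {decode_time_seconds(n, rate):.2f} s")
```

At exactly 100 tokens per second, a hypothetical 40-token reply would need about 0.4 s of decoding time alone; a faster rate shortens that proportionally.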

Mu is built on a transformer-based encoder-decoder architecture with 330 million parameters, which makes the SLM a good fit for small-scale deployment. In such an architecture, the encoder first converts the input into a fixed-length latent representation, which the decoder then uses to generate the output.

Microsoft said it preferred this architecture for its high efficiency, which is essential when working with limited computational bandwidth. To keep the model within the NPU’s constraints, the company also chose suitable layer dimensions and optimised how parameters are distributed between the encoder and decoder.
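The division of labour between encoder and decoder can be illustrated with a toy, stdlib-only Python sketch: the encoder runs once over the whole input and produces a fixed-length vector, and the decoder then generates output one token at a time, conditioning every step on that same cached vector. Everything here (the vocabulary, the `embed` function, the mean-pooling encoder, the dot-product scoring rule) is hypothetical and far simpler than a real transformer; it shows only the control flow, not how Mu is implemented.

```python
from typing import Callable, List, Sequence

Vector = List[float]
DIM = 4  # hypothetical embedding width
EOS = 0  # hypothetical end-of-sequence id

# Hypothetical toy vocabulary (id 0 is the end-of-sequence marker).
VOCAB = ["<eos>", "open", "display", "brightness", "slider", "night", "light"]


def embed(token_id: int) -> Vector:
    """Deterministic toy embedding, standing in for a learned embedding table."""
    return [float((token_id + 1) * (d + 3) % 5) - 2.0 for d in range(DIM)]


def encode(input_ids: Sequence[int]) -> Vector:
    """Encoder: compress a variable-length input into one fixed-length vector."""
    if not input_ids:
        raise ValueError("input must contain at least one token")
    vectors = [embed(t) for t in input_ids]
    # Mean pooling: the result has DIM entries however long the input is.
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(DIM)]


def decoder_step(context: Vector, generated: List[int]) -> int:
    """Decoder: choose the next token from the encoder output and the last token."""
    last = embed(generated[-1]) if generated else [0.0] * DIM
    query = [c + p for c, p in zip(context, last)]
    # Skip EOS and tokens already emitted so this toy loop cannot repeat itself.
    candidates = [t for t in range(1, len(VOCAB)) if t not in generated]
    if not candidates:
        return EOS
    return max(candidates, key=lambda t: sum(q * e for q, e in zip(query, embed(t))))


def generate(
    input_ids: Sequence[int],
    max_new_tokens: int = 3,
    encoder: Callable[[Sequence[int]], Vector] = encode,
    step: Callable[[Vector, List[int]], int] = decoder_step,
) -> List[int]:
    context = encoder(input_ids)  # the encoder runs exactly once per request
    output: List[int] = []
    for _ in range(max_new_tokens):
        next_id = step(context, output)  # every step reuses the same encoder output
        if next_id == EOS:
            break
        output.append(next_id)
    return output


if __name__ == "__main__":
    request = [VOCAB.index("display"), VOCAB.index("brightness")]
    print([VOCAB[t] for t in generate(request)])
```

Because the encoder output is computed once and reused, each step of the generation loop only does decoder work, which is the kind of efficiency the design is meant to deliver.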

Distilled from the company’s Phi models, Mu was trained on A100 GPUs using Azure Machine Learning. Distilled models are typically more efficient than their parent model. Microsoft further improved the model’s efficiency by fine-tuning it on task-specific data using low-rank adaptation (LoRA). Notably, the company claims that Mu performs at a similar level to Phi-3.5-mini despite being one-tenth its size.
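Low-rank adaptation keeps a pretrained weight matrix W frozen and learns a small update expressed as the product of two thin matrices, so an adapted layer computes y = Wx + (alpha / r) * B(Ax). The stdlib-only Python sketch below shows that forward pass and why it is cheap to fine-tune. The matrix sizes, the rank r and the scaling factor alpha are illustrative values, not Mu’s actual configuration, and the sketch shows only the forward pass, not the training loop.

```python
from typing import List

Matrix = List[List[float]]
Vec = List[float]


def matvec(m: Matrix, x: Vec) -> Vec:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters in A (rank x d_in) plus B (d_out x rank)."""
    return rank * (d_in + d_out)


class LoRALinear:
    """y = W x + (alpha / rank) * B (A x), where only A and B would be trained."""

    def __init__(self, weight: Matrix, rank: int, alpha: float):
        self.weight = weight  # frozen pretrained weights, shape d_out x d_in
        d_out, d_in = len(weight), len(weight[0])
        self.rank = rank
        self.scale = alpha / rank
        # A gets small non-zero values (random in practice; fixed here for repeatability).
        self.A: Matrix = [[0.01 * ((i + j) % 3 - 1) for j in range(d_in)] for i in range(rank)]
        # B starts at zero, so the adapted layer initially equals the original layer.
        self.B: Matrix = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x: Vec) -> Vec:
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        return lora_param_count(len(self.weight), len(self.weight[0]), self.rank)


if __name__ == "__main__":
    d, r = 1024, 8  # hypothetical layer size and rank
    print("full fine-tuning:", d * d, "parameters")
    print("LoRA, rank 8:   ", lora_param_count(d, d, r), "parameters")
```

For a hypothetical 1,024 x 1,024 layer, rank-8 LoRA trains 16,384 parameters instead of 1,048,576, which is why it suits adapting a model to a narrow task on modest hardware budgets.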

Optimising Mu for Windows Settings

The tech giant also had to solve another problem before the model could power AI agents in Settings: it needed to handle the input and output tokens involved in changing hundreds of system settings. This required not only broad knowledge of those settings but also low latency, so that tasks complete almost instantaneously.

Hence, Microsoft massively scaled up its training data, going from 50 settings to hundreds, and used techniques such as synthetic labelling and noise injection to teach the AI how people phrase common tasks. After training on more than 3.6 million examples, the model became fast and accurate enough to respond in under half a second, the company claimed.
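Noise injection of this kind generally means perturbing clean training queries (typos, dropped words) while keeping their labels, so that a model learns to map imperfect phrasings to the right setting. The Python sketch below is a generic, hypothetical illustration of that idea: the two perturbation rules, the example query and the `display.night_light` label are invented for the example and are not Microsoft’s published recipe.

```python
import random
from typing import List, Tuple

Example = Tuple[str, str]  # (user query, target setting id)


def drop_word(words: List[str], rng: random.Random) -> List[str]:
    """Remove one random word, if there is more than one."""
    if len(words) < 2:
        return words
    i = rng.randrange(len(words))
    return words[:i] + words[i + 1:]


def swap_chars(words: List[str], rng: random.Random) -> List[str]:
    """Simulate a typo by swapping two adjacent characters in one word."""
    candidates = [i for i, w in enumerate(words) if len(w) >= 2]
    if not candidates:
        return words
    i = rng.choice(candidates)
    w = words[i]
    j = rng.randrange(len(w) - 1)
    typo = w[:j] + w[j + 1] + w[j] + w[j + 2:]
    return words[:i] + [typo] + words[i + 1:]


def augment(examples: List[Example], copies: int, seed: int = 0) -> List[Example]:
    """Return the originals plus `copies` noisy variants of each; labels are unchanged."""
    rng = random.Random(seed)  # seeded so the augmented set is reproducible
    perturbations = [drop_word, swap_chars]
    out = list(examples)
    for query, label in examples:
        for _ in range(copies):
            noisy = rng.choice(perturbations)(query.split(), rng)
            out.append((" ".join(noisy), label))
    return out


if __name__ == "__main__":
    # Hypothetical labelled example; the setting id is invented for illustration.
    data = [("lower screen brightness at night", "display.night_light")]
    for q, label in augment(data, copies=3):
        print(f"{label:22} <- {q}")
```

The point of the design is that every noisy variant inherits the clean example’s label, so the training set grows without any extra manual labelling.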

One key challenge was that Mu performed better with multi-word queries than with short or vague phrases. For instance, typing “lower screen brightness at night” gives it more context than just typing “brightness.” To address this, Microsoft continues to show traditional keyword-based search results when a query is too vague.
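The fallback amounts to a routing decision made before the model is called. A minimal Python sketch, assuming a simple word-count threshold as the vagueness test; Microsoft has not said what heuristic it uses, and the threshold, the keyword index, the page names and the stand-in agent function are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical index from keywords to Settings pages, for illustration only.
KEYWORD_INDEX: Dict[str, List[str]] = {
    "brightness": ["Display > Brightness", "Display > Night light"],
    "bluetooth": ["Bluetooth & devices"],
}

MIN_WORDS_FOR_AGENT = 3  # hypothetical threshold for "specific enough"


def keyword_search(query: str) -> List[str]:
    """Traditional lookup: return every page whose keyword appears in the query."""
    results: List[str] = []
    for word in query.lower().split():
        for page in KEYWORD_INDEX.get(word, []):
            if page not in results:
                results.append(page)
    return results


def handle_query(query: str, agent: Callable[[str], str]) -> Dict[str, object]:
    """Send multi-word queries to the agent; fall back to keyword results for short ones."""
    if len(query.split()) < MIN_WORDS_FOR_AGENT:
        return {"route": "search", "results": keyword_search(query)}
    return {"route": "agent", "action": agent(query)}


if __name__ == "__main__":
    def fake_agent(q: str) -> str:  # stand-in for the on-device model
        return f"agent handles: {q}"

    print(handle_query("brightness", fake_agent))
    print(handle_query("lower screen brightness at night", fake_agent))
```

A real system could use a richer vagueness signal (for example, the model’s own confidence), but a word count is the simplest rule that matches the behaviour described.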

Microsoft also observed a language-based gap: in some cases, a request could apply to more than one setting (for instance, “increase brightness” could refer to the device’s own screen or an external monitor). To address this gap, the AI model currently focuses on the most commonly used settings, something the tech giant continues to refine.