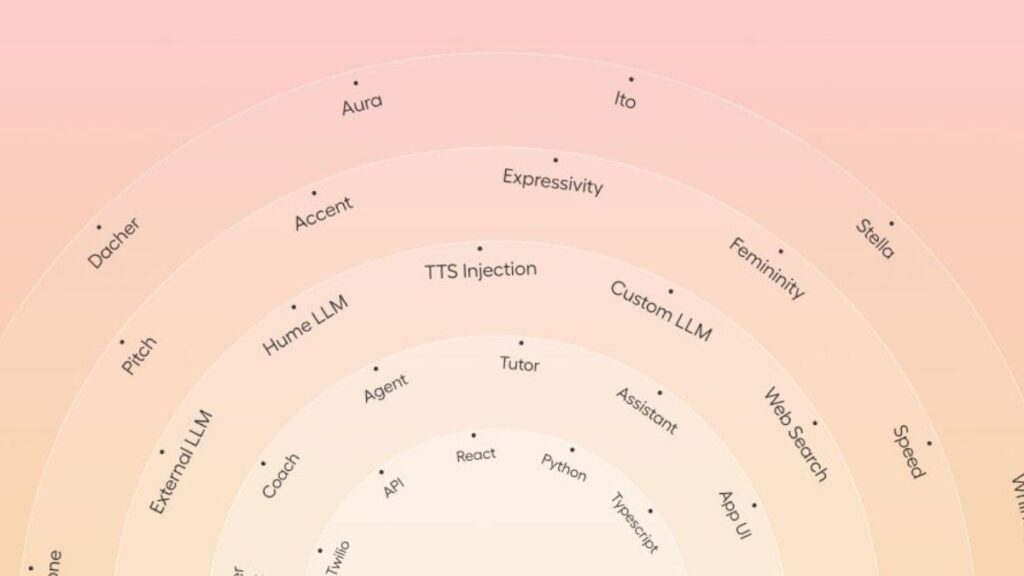

Hume, a New York-based artificial intelligence (AI) firm, unveiled a new tool on Monday that lets users customise AI voices. Dubbed Voice Control, the new feature is aimed at helping developers integrate these voices into their chatbots and other AI-based applications. Instead of offering a wide range of voices, the company offers granular control over 10 different dimensions of voice. By selecting the desired parameters in each of the dimensions, users can generate unique voices for their apps.

The company detailed the new AI tool in a blog post. Hume stated that it is trying to solve the problem of enterprises finding the right AI voice to match their brand identity. With this feature, users can customise different aspects of how a voice is perceived, allowing developers to create a more assertive, relaxed, or buoyant voice for AI-based applications.

Hume’s Voice Control is currently available in beta, but it can be accessed by anyone registered on the platform. Gadgets 360 staff members were able to access the tool and test the feature. There are 10 different dimensions developers can adjust: gender, assertiveness, buoyancy, confidence, enthusiasm, nasality, relaxedness, smoothness, tepidity, and tightness.

Instead of adding prompt-based customisation, the company has added a slider that goes from -100 to +100 for each of the metrics. The company stated that this approach was taken to eliminate the vagueness associated with the textual description of a voice and to offer granular control over the languages.
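To make the slider model concrete, the short Python sketch below represents one voice as a value for each of the 10 dimensions and checks that every value stays within the -100 to +100 range. It is purely illustrative and is not Hume's API: the names `DIMENSIONS` and `make_voice_settings` are hypothetical, and treating 0 as the unmodified base voice is an assumption rather than something the company has stated.

```python
# Illustrative sketch only; this is NOT Hume's API.
# The ten dimension names come from the article.
DIMENSIONS = (
    "gender", "assertiveness", "buoyancy", "confidence", "enthusiasm",
    "nasality", "relaxedness", "smoothness", "tepidity", "tightness",
)

# Slider range described in the article.
SLIDER_MIN, SLIDER_MAX = -100, 100


def make_voice_settings(**adjustments):
    """Return a dict holding one slider value per dimension.

    Dimensions that are not passed in default to 0, which this sketch
    assumes to mean "leave the base voice unchanged".
    """
    settings = {name: 0 for name in DIMENSIONS}
    for name, value in adjustments.items():
        if name not in settings:
            raise KeyError(f"unknown dimension: {name}")
        if not SLIDER_MIN <= value <= SLIDER_MAX:
            raise ValueError(
                f"{name} must be between {SLIDER_MIN} and {SLIDER_MAX}, got {value}"
            )
        settings[name] = value
    return settings


# Example: a more assertive, slightly less nasal voice.
voice = make_voice_settings(assertiveness=60, nasality=-20)
```

Keeping each dimension as a bounded number, rather than a free-text description, mirrors the reasoning given above: a numeric value leaves no room for the ambiguity of a phrase such as "a bit more confident".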

In our testing, we found that changing any of the 10 dimensions makes an audible difference to the AI voice, and the tool was able to disentangle the different dimensions correctly. The AI firm claimed that this was achieved by developing a new “unsupervised method” which preserves most characteristics of each base voice when specific parameters are varied. Notably, Hume did not detail the source of the procured data.

After creating an AI voice, developers will have to deploy it to the application by configuring its Empathic Voice Interface (EVI) AI model. While the company did not specify, the EVI-2 model was likely used for this experimental feature.

In the future, Hume plans to expand the range of base voices, introduce additional interpretable dimensions, enhance the preservation of voice characteristics under extreme modifications, and develop advanced tools to analyse and visualise voice characteristics.
